Software testing is complex and resource-intensive. Naturally, you want to optimize your testing process to get the best return, in quality, on your testing investment. The seven principles of testing help you set optimal quality standards and give you and your customers confidence that the software is ready for action.

1. Testing shows the presence of defects, not their absence

If your QA team reports zero defects after the testing cycle, it does not mean there are no bugs in the software. It means only that the QA team did not find any; bugs may still be present. There are many possible reasons for not detecting defects, the most common being that the test cases do not cover all scenarios.
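A tiny illustration, using a hypothetical leap-year function (not from any real project): every test case the suite contains passes, yet the function is wrong for an input the suite never exercises. The standard library's `calendar.isleap` serves as the reference answer.

```python
import calendar

def is_leap_year(year: int) -> bool:
    """Hypothetical, deliberately buggy implementation: it ignores the
    Gregorian century rule (years divisible by 100 are leap years only
    if they are also divisible by 400)."""
    return year % 4 == 0

# The only cases the (hypothetical) test suite checks; all of them pass.
test_cases = {2019: False, 2020: True, 2023: False, 2024: True}
all_pass = all(is_leap_year(y) == expected for y, expected in test_cases.items())
print(all_pass)                # True: the cycle reports zero defects

# An uncovered scenario, checked against the standard library.
print(is_leap_year(1900))      # True
print(calendar.isleap(1900))   # False: 1900 was not a leap year
```

A green test run says something about the cases that were run, and nothing about the ones that were not.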

This principle helps set stakeholder expectations: never claim that the software is defect-free.

2. Exhaustive testing is impossible

Consider a simple real-world example: a screen that takes two numbers as input and displays their sum. Because there are infinitely many possible numbers, validating this screen for every possible input would take infinite time.
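Even a bounded version of the screen is out of reach. The arithmetic below assumes, hypothetically, that each input is limited to a 32-bit signed integer and that an automated harness runs one billion tests per second; neither figure comes from the original.

```python
# Assumption: each input is a 32-bit signed integer (2**32 possible values).
values_per_input = 2 ** 32
combinations = values_per_input ** 2        # every (a, b) pair: 2**64

# Assumption: a harness that executes one billion tests per second.
tests_per_second = 10 ** 9
seconds_per_year = 365.25 * 24 * 60 * 60

years = combinations / tests_per_second / seconds_per_year
print(f"{combinations:,} combinations, about {years:,.0f} years")
# 18,446,744,073,709,551,616 combinations, about 585 years
```

Allowing decimal inputs, as a real calculator screen would, makes the input space unbounded again.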

If even a screen this simple cannot be tested exhaustively, what about a full-fledged application?

Trying to test exhaustively burns time and money without a matching improvement in overall quality. The correct approach is to reduce the test cases to a manageable, well-chosen set using standard black-box and white-box test design strategies.
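A minimal sketch of black-box test design for the sum screen, assuming (hypothetically) that it accepts 32-bit signed integers and reports an error when the sum does not fit in 32 bits. Equivalence partitioning picks one representative from each class of inputs expected to behave alike, and boundary value analysis adds cases at, and just beyond, the edges of the valid range. The `add` function is an illustrative stand-in for the screen's logic, not code from the original.

```python
INT32_MIN, INT32_MAX = -2**31, 2**31 - 1

def add(a: int, b: int) -> int:
    """Hypothetical logic behind the sum screen: adds two 32-bit integers
    and rejects results that do not fit in 32 bits."""
    for x in (a, b):
        if not INT32_MIN <= x <= INT32_MAX:
            raise ValueError(f"input out of range: {x}")
    total = a + b
    if not INT32_MIN <= total <= INT32_MAX:
        raise OverflowError(f"sum out of range: {total}")
    return total

# One representative per equivalence class, plus values at the edges.
VALID_CASES = [
    (2, 3, 5),                    # both positive
    (-2, -3, -5),                 # both negative
    (-2, 3, 1),                   # mixed signs
    (0, 0, 0),                    # zero
    (INT32_MAX, 0, INT32_MAX),    # upper boundary, still fits
    (INT32_MIN, 0, INT32_MIN),    # lower boundary, still fits
    (INT32_MAX, INT32_MIN, -1),   # extreme values of opposite sign
]
# Just beyond the boundaries: the sum no longer fits.
OVERFLOW_CASES = [
    (INT32_MAX, 1),
    (INT32_MIN, -1),
]

def run_suite() -> int:
    """Run every case and return how many were executed."""
    for a, b, expected in VALID_CASES:
        assert add(a, b) == expected, (a, b)
    for a, b in OVERFLOW_CASES:
        try:
            add(a, b)
        except OverflowError:
            continue
        raise AssertionError(f"expected overflow for {(a, b)}")
    return len(VALID_CASES) + len(OVERFLOW_CASES)

print(run_suite())  # 9
```

Under these assumptions, nine cases stand in for 2**64 possible input pairs. The trade-off is that a defect confined to a partition nobody thought of still goes unnoticed, which is exactly what principle 1 warns about.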

3. Early testing saves time and money

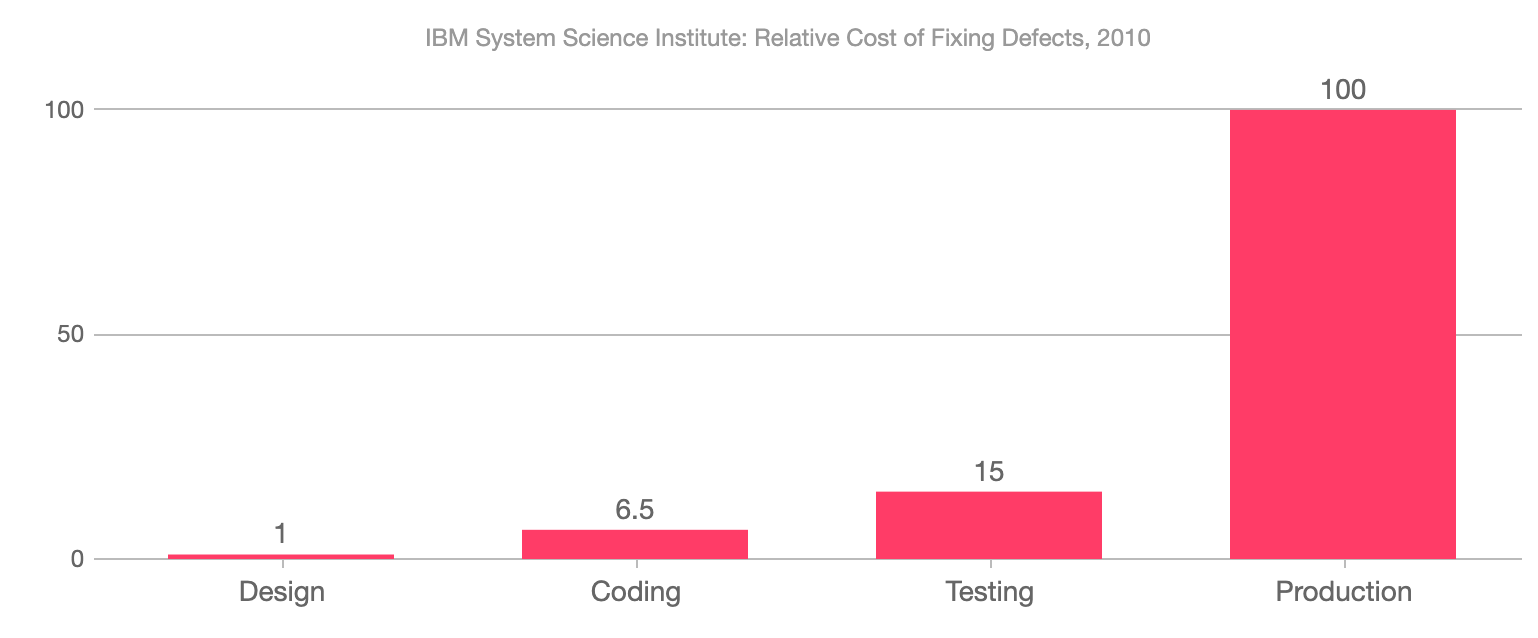

The proverb "a stitch in time saves nine" applies to most things in life, and software testing is no exception. According to a study by IBM, a defect that costs $1 to fix in the design phase can cost $15 to fix in the testing phase and a whopping $100 if it is detected in a production system.

Early testing also saves time. Unit testing and integration testing can quickly reveal design flaws, which can cause massive delays if detected later during system testing.

4. Defects cluster together

This principle is an example of the 80:20 rule, also called the Pareto principle: 80 percent of defects come from 20 percent of the code. The ratio is not a fixed law; it rests on the observation that 80 percent of users use only 20 percent of the software. That heavily used 20 percent is what contributes most of the defects.

This principle helps your team focus its testing effort on the right area: the most heavily used parts of the software.
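One common way to find those areas is a Pareto analysis of past defects: rank modules by defect count and keep the smallest set that accounts for 80 percent of the total. The sketch below assumes you can export per-module defect counts from your bug tracker; the module names and counts are invented for illustration.

```python
from typing import Dict, List

def pareto_hotspots(defects_per_module: Dict[str, int], threshold_pct: int = 80) -> List[str]:
    """Return the fewest modules, highest defect count first, whose defects
    together reach `threshold_pct` percent of all defects."""
    total = sum(defects_per_module.values())
    if total == 0:
        return []
    ranked = sorted(defects_per_module.items(), key=lambda kv: kv[1], reverse=True)
    hotspots: List[str] = []
    running = 0
    for module, count in ranked:
        hotspots.append(module)
        running += count
        # Integer comparison avoids floating-point rounding at the threshold.
        if running * 100 >= threshold_pct * total:
            break
    return hotspots

# Hypothetical defect counts for ten modules (100 defects in total).
defects = {
    "checkout": 48, "search": 32, "login": 6, "profile": 4, "settings": 3,
    "help": 2, "export": 2, "about": 1, "themes": 1, "feedback": 1,
}
print(pareto_hotspots(defects))  # ['checkout', 'search']
```

In this made-up data, 2 of 10 modules (20 percent) hold 80 percent of the defects, so they are where additional test cases are likely to pay off first.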

5. Beware of the pesticide paradox

Repeated use of the same pesticide eventually makes pests immune to it. Similarly, running the same test cases against a module over and over can make the module "immune" to them: the tests keep passing, but they stop uncovering new defects. This principle is especially relevant when testing complex algorithms.

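For example, consider resource scheduling software that assigns resources to tasks based on their work timings, time zones, and holidays. Testers wrote ten test cases related to scheduling, and after four rounds of testing, all the test cases passed. Does this mean the module is defect-free? Probably not. It took four cycles to clear the bugs these ten tests exposed, which suggests the module is defect-prone, and the code has now been fixed specifically to pass these tests. Defects in scenarios the tests do not exercise remain undetected.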

What's the solution?

Review your test cases regularly and write new and better ones, especially for crucial parts of the application. Preferably, use a code-coverage tool to find the statements and branches your test cases do not exercise.
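Another way to keep tests from going stale is property-based testing with freshly generated inputs, a technique swapped in here as one option, not as the article's prescribed fix. Instead of replaying the same fixed cases, each cycle draws new random dates and holidays and checks properties that must always hold. `next_working_day` is a hypothetical stand-in for one small piece of the scheduling module above (it ignores time zones and work timings). In Python, libraries such as Hypothesis automate input generation; this sketch uses only the standard library.

```python
import datetime as dt
import random
from typing import Set

def next_working_day(day: dt.date, holidays: Set[dt.date]) -> dt.date:
    """Hypothetical scheduler helper: the first date after `day` that is
    neither a Saturday, a Sunday, nor a holiday."""
    candidate = day + dt.timedelta(days=1)
    while candidate.weekday() >= 5 or candidate in holidays:
        candidate += dt.timedelta(days=1)
    return candidate

def is_working(day: dt.date, holidays: Set[dt.date]) -> bool:
    return day.weekday() < 5 and day not in holidays

def check_properties(seed: int, runs: int = 1000) -> None:
    """Check invariants on random inputs drawn from `seed`."""
    rng = random.Random(seed)
    start = dt.date(2000, 1, 1)
    for _ in range(runs):
        day = start + dt.timedelta(days=rng.randrange(0, 365 * 30))
        # Up to five random holidays near `day`, so they interact with it.
        holidays = {
            day + dt.timedelta(days=rng.randrange(-3, 15))
            for _ in range(rng.randrange(0, 6))
        }
        result = next_working_day(day, holidays)
        assert result > day
        assert is_working(result, holidays)
        # No working day was skipped between `day` and `result`.
        for offset in range(1, (result - day).days):
            assert not is_working(day + dt.timedelta(days=offset), holidays)

for cycle_seed in (1, 2, 3):  # e.g. a different seed for each test cycle
    check_properties(cycle_seed)
print("all properties held")
```

Recording the seed of each run lets a failing input be replayed exactly. Randomized inputs complement coverage measurement rather than replace it: coverage shows which code is never reached, while fresh inputs probe reached code with cases nobody wrote by hand.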

6. Testing is context-dependent

There is no one-size-fits-all strategy in software testing. Of course, testing a web application differs from testing an ATM, but this principle goes deeper than that.

For example, consider two companies that each sell a ten-pack of batteries. The first is a premium brand priced at $50, while the other costs $5, a tenth of the price.

Does it make sense for both companies to have the same QA processes? Clearly not. The first company charges for quality, so it needs more rigorous standards and quality control.

For your own application, don't adopt a testing strategy without first understanding your cost of quality: you don't want to spend more on quality than you get back in return. Put quality in the context of your product offering.

7. Beware of the absence-of-errors fallacy

It is a common belief that a low defect rate means the product is a success. This belief is the absence-of-errors fallacy.

Zero defects do not mean the software solves end-user problems. Linux has always had relatively few bugs, while Microsoft Windows was (is?) notorious for them. Yet most people chose Microsoft Windows as their operating system because they found it easier to use and better at solving their problems. Linux is becoming increasingly mainstream today now that it has started focusing on end-user experience.